1. Introduction: Data Quality Is the Foundation of AI

Discover why data quality matters for free AI analysis. Learn how to identify common data issues, apply best practices, and build strategies for reliable AI outputs in 2025.

Any free AI tool—chatbots, analytics, or stock pickers—relies on data as its fuel. But what happens when that fuel is contaminated? Outputs become unreliable, insights flawed, and decisions risk-prone. As AI adoption grows, data quality is no longer optional—it’s fundamental. This guide examines:

Why data quality matters

Real-world risks of poor data

Detection and cleaning strategies

Best practices and tools

How to leverage clean data for free AI

Let’s dive in.

2. Why Data Quality Matters in AI Analysis

2.1 Accurate Insights & Reliable Predictions

Quality data makes AI outputs precise and dependable, which is vital for finance, marketing, and decision support.

2.2 Enhanced Model Performance

Garbage in, garbage out (GIGO) is real. Machine learning models thrive on clean, representative data, and studies show that even minor noise can noticeably reduce model accuracy.

2.3 Reduced Bias & Fairness

Clean data reduces the risk of embedded bias. Diverse, accurate datasets produce more equitable outcomes.

2.4 Increased Trust & Adoption

You’re more likely to rely on AI tools that consistently perform well—trust breeds usage.

Studies highlight low trust when AI produces inconsistent or inaccurate insights.

3. Common Data Problems That Derail AI

3.1 Inaccuracy & Incorrect Formatting

Typos, misaligned dates, and incorrect units undermine analysis.
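
As a rough illustration of catching misaligned dates, the sketch below tries a short list of accepted formats and reports anything that fits none of them. The format list, the day-first reading of slashed dates, and the sample values are assumptions made for this example.

```python
from datetime import date, datetime
from typing import Optional

# Accepted formats, tried in order. This list is an assumption for the
# example; slashed dates are read day-first (DD/MM/YYYY).
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

def parse_date(raw: str) -> Optional[date]:
    """Return a date if raw matches one known format, otherwise None."""
    text = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None

samples = ["2025-03-01", "01/03/2025", "1.3.2025", "2025-13-01", "n/a"]
parsed = [parse_date(s) for s in samples]

# Values that matched no format should be reviewed, not silently dropped.
unparseable = [s for s, d in zip(samples, parsed) if d is None]
print(unparseable)
```

Ambiguous inputs such as 01/03/2025 cannot be resolved by parsing alone; the source system has to tell you whether it writes day-first or month-first.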

3.2 Missing, Duplicate, or Incomplete Values

Absent data skews results, especially in forecasting models.
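
A minimal sketch of spotting both problems before a dataset reaches an AI tool. The field names and sample rows are invented for illustration, and treating `id` as the duplicate key is an assumption.

```python
from collections import Counter

# Hypothetical rows; None and blank strings both count as missing.
records = [
    {"id": 1, "ticker": "AAA", "close": 10.5},
    {"id": 2, "ticker": "BBB", "close": None},
    {"id": 3, "ticker": "", "close": 7.2},
    {"id": 1, "ticker": "AAA", "close": 10.5},
]

def is_missing(value) -> bool:
    """True for None or a string that is empty after trimming."""
    return value is None or (isinstance(value, str) and value.strip() == "")

# Count missing cells per field.
missing_per_field = Counter(
    field for row in records for field, value in row.items() if is_missing(value)
)

# Report ids that appear more than once.
id_counts = Counter(row["id"] for row in records)
duplicate_ids = sorted(k for k, n in id_counts.items() if n > 1)

print(dict(missing_per_field), duplicate_ids)
```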

3.3 Inconsistent Representation

Variations like “USD” vs “$” or “NY” vs “New York” confuse algorithms.
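
One simple way to handle this is a lookup table that maps every known variant to a single canonical value. The sketch below assumes a hand-maintained alias table; the entries are examples only, and unknown values are passed through unchanged so they can be reviewed.

```python
# Hypothetical alias table: lowercase variant -> canonical value.
ALIASES = {
    "currency": {"$": "USD", "usd": "USD", "us dollar": "USD"},
    "state": {"ny": "NY", "n.y.": "NY", "new york": "NY"},
}

def canonicalize(field: str, value: str) -> str:
    """Map a known variant to its canonical form; leave unknown values as-is."""
    trimmed = value.strip()
    return ALIASES.get(field, {}).get(trimmed.lower(), trimmed)

print(canonicalize("currency", "$"))        # USD
print(canonicalize("state", " New York "))  # NY
print(canonicalize("state", "Texas"))       # Texas
```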

3.4 Outliers and Anomalies

Extreme values, whether genuine anomalies or recording errors, can distort model outputs, reportedly by as much as 30%.
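
To see how a single bad reading skews a summary statistic, the sketch below flags values outside the common 1.5 × IQR fences. The fence multiplier is a widely used convention rather than anything prescribed here, and the readings are made up.

```python
from statistics import mean, median, quantiles

# Hypothetical readings with one obvious recording error.
readings = [102, 98, 101, 97, 100, 103, 99, 950]

# Quartiles via the standard library (default "exclusive" method).
q1, _, q3 = quantiles(readings, n=4)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

outliers = [x for x in readings if x < lower or x > upper]

# The mean is pulled far from the typical value; the median barely moves.
print(outliers, mean(readings), median(readings))
```

Flagged values still need a human decision: a genuine spike should stay in the data, while a unit or entry error should be corrected or removed.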

3.5 Latency & Staleness

Old, outdated data fails to reflect current trends, reducing AI effectiveness.
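
A staleness check can be as small as comparing each source's last update time against a freshness window. The 24-hour window, the source names, and the timestamps below are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(hours=24)  # assumed freshness window

def stale_sources(last_updated: dict, now: datetime, max_age: timedelta = MAX_AGE) -> list:
    """Return the names of sources whose last update is older than max_age."""
    return sorted(name for name, ts in last_updated.items() if now - ts > max_age)

# A fixed "now" keeps the example deterministic.
now = datetime(2025, 6, 2, 12, 0, tzinfo=timezone.utc)
last_updated = {
    "prices": datetime(2025, 6, 2, 9, 30, tzinfo=timezone.utc),
    "fundamentals": datetime(2025, 5, 20, 0, 0, tzinfo=timezone.utc),
}

stale = stale_sources(last_updated, now)
print(stale)
```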

4. Real‑world Examples of Data Quality Failures

4.1 AI Hallucinations & Factual Errors

A recent study found that large language models regularly hallucinate or provide false information under pressure.

4.2 Financial AI Mishaps

Investor tools (e.g., Robinhood, Public) analyze filings and earnings calls, but hallucinations in input metadata can mislabel sentiment or trigger false alerts.

4.3 IoT and Government Data Misreports

India’s Chief Economic Advisor emphasized how poor-quality sensor data can derail policymaking.

5. Step-by-Step: Assessing & Improving Data Quality

5.1 Define Data Quality Metrics

Track accuracy, completeness, consistency, timeliness, and relevance.
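
Two of these metrics are easy to turn into numbers you can track over time. The sketch below scores completeness as the share of filled cells and consistency as the share of values drawn from an allowed set; both definitions, the field names, and the sample rows are assumptions, and accuracy or relevance usually need a trusted reference to measure.

```python
def completeness(rows: list, fields: list) -> float:
    """Share of required cells that are filled (not None and not blank)."""
    total = len(rows) * len(fields)
    filled = sum(1 for row in rows for f in fields if row.get(f) not in (None, ""))
    return filled / total if total else 1.0

def consistency(rows: list, field: str, allowed: set) -> float:
    """Share of rows whose value for field is one of the allowed forms."""
    if not rows:
        return 1.0
    return sum(1 for row in rows if row.get(field) in allowed) / len(rows)

# Hypothetical sample: one missing price, two non-canonical currency codes.
rows = [
    {"price": 10.0, "currency": "USD"},
    {"price": None, "currency": "usd"},
    {"price": 12.0, "currency": "$"},
    {"price": 9.5, "currency": "USD"},
]

print(completeness(rows, ["price", "currency"]))  # 0.875
print(consistency(rows, "currency", {"USD"}))     # 0.5
```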

5.2 Use Profiling Tools

Scan datasets to detect missing values and anomalies (e.g., Talend, Trifacta, Apache Griffin).

5.3 Clean & Normalize

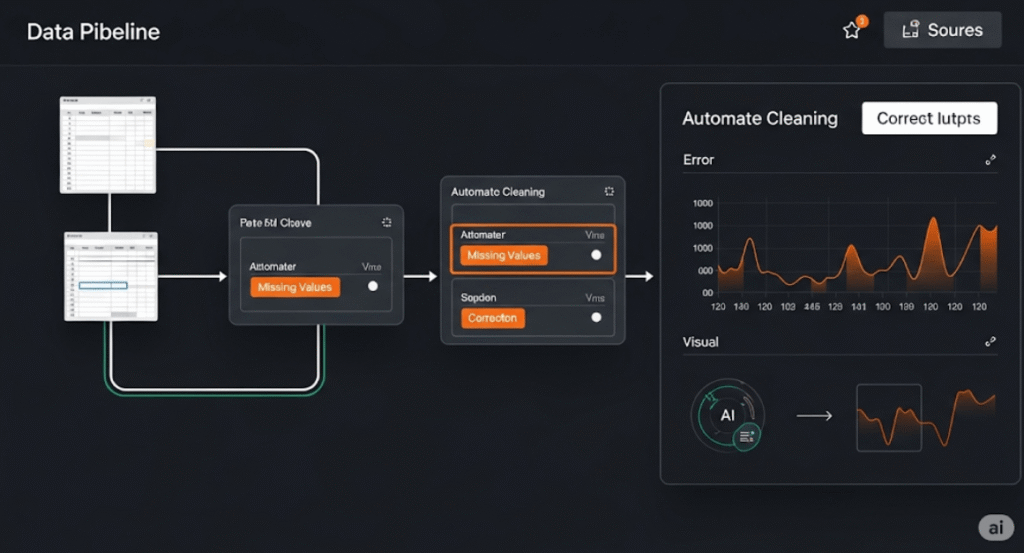

Use automated tools to standardize formats, fill gaps, and dedupe records.
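
The three operations can be sketched in a few lines of plain Python. This is a toy pipeline under stated assumptions: the `ticker` and `close` fields are hypothetical, gaps are filled with the median of the known values, and duplicates are removed before filling so that repeated rows do not bias that median.

```python
from statistics import median

def clean(rows: list) -> list:
    """Standardize tickers, drop exact duplicates, then fill missing closes."""
    # 1. Standardize formats: trim whitespace and upper-case the ticker.
    normalized = [
        {"ticker": r["ticker"].strip().upper(), "close": r["close"]} for r in rows
    ]

    # 2. Dedupe, keeping the first occurrence of each (ticker, close) pair.
    seen, unique = set(), []
    for r in normalized:
        key = (r["ticker"], r["close"])
        if key not in seen:
            seen.add(key)
            unique.append(r)

    # 3. Fill gaps with the median of known values (an assumed strategy).
    known = [r["close"] for r in unique if r["close"] is not None]
    fill = median(known) if known else None
    for r in unique:
        if r["close"] is None:
            r["close"] = fill
    return unique

raw = [
    {"ticker": " aaa ", "close": 10.0},
    {"ticker": "AAA", "close": 10.0},
    {"ticker": "bbb", "close": None},
    {"ticker": "CCC", "close": 14.0},
]
cleaned = clean(raw)
print(cleaned)
```

Median filling is only one option; for time series, carrying the last known value forward or leaving the gap flagged may be more appropriate.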

5.4 Version Control

Capture snapshots of datasets; they are essential for reproducibility and audits.
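
Even without a dedicated data versioning tool, a content fingerprint stored next to each snapshot lets you confirm later that an analysis ran on exactly the same data. The sketch below hashes a canonical JSON form of the rows; the `fingerprint` helper and the sample rows are hypothetical.

```python
import hashlib
import json

def fingerprint(rows: list) -> str:
    """SHA-256 of a canonical JSON encoding (sorted keys, fixed separators)."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

snapshot_v1 = [{"ticker": "AAA", "close": 10.0}]
same_content = [{"close": 10.0, "ticker": "AAA"}]  # key order differs only
snapshot_v2 = [{"ticker": "AAA", "close": 10.5}]   # one value changed

print(fingerprint(snapshot_v1) == fingerprint(same_content))  # True
print(fingerprint(snapshot_v1) == fingerprint(snapshot_v2))   # False
```

Row order still affects the hash, so sort rows by a stable key first if order is not meaningful in your dataset.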

5.5 Continuous Monitoring

Set up observability pipelines (e.g., Monte Carlo, Acceldata) to flag quality gaps.
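
The core idea behind such monitoring can be illustrated without any vendor product: compute a few metrics on every refresh and raise an alert when one crosses a threshold. The sketch below does not use the Monte Carlo or Acceldata APIs; the metric names and threshold values are assumptions.

```python
# Assumed upper limits for each metric; tune these to your own data.
THRESHOLDS = {"null_rate": 0.05, "duplicate_rate": 0.01}

def check(metrics: dict, thresholds: dict = THRESHOLDS) -> list:
    """Return one alert message per metric that exceeds its threshold."""
    return [
        f"{name}={value:.3f} exceeds {thresholds[name]:.3f}"
        for name, value in metrics.items()
        if name in thresholds and value > thresholds[name]
    ]

# Metrics from a hypothetical daily refresh.
alerts = check({"null_rate": 0.12, "duplicate_rate": 0.0})
print(alerts)
```

In practice the returned messages would feed whatever alerting channel the team already uses, such as email or chat.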

6. AI‑Driven Tools for Data Quality & Cleaning

| Tool | Capability |
|---|---|
| Talend | Cleansing, auditing, workflow management |
| Trifacta | ML-assisted data transformation |
| Apache Griffin | Quality monitoring for large datasets |
| Monte Carlo | Observability pipelines for anomalies |
| Informatica IDQ | Enterprise-grade validation and governance |
| OpenRefine | Free tool to reshape and dedupe data |

AI features include auto-format detection, smart normalization, anomaly detection, and dynamic profiling.

7. Best Practices for Reliable AI Outputs

Start with clear quality goals: know what you expect from your data.

Document data provenance: track data origins and processing steps.

Automate cleaning pipelines: reduce human error and scale.

Apply version control and reproducibility: both are essential for trusting results.

Include governance and permissions: establish clear ownership and responsibility.

Cross-check outcomes: validate against external benchmarks or alternative tools.

8. Integrating Data Quality into Free AI Tool Use

8.1 ChatGPT/Text Analysis

Always clean text inputs: remove artifacts or odd symbols to reduce the risk of hallucinations.
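
A small pre-processing step along these lines can run before any text is pasted into a chatbot. The sketch below normalizes Unicode, removes control and invisible format characters and the replacement character left by bad decoding, and collapses runs of spaces; the exact choice of what to strip is an assumption and may need adjusting for your sources.

```python
import re
import unicodedata

def clean_text(raw: str) -> str:
    """Normalize Unicode, drop invisible/control characters, tidy spacing."""
    # NFKC folds compatibility forms, e.g. non-breaking space -> space.
    text = unicodedata.normalize("NFKC", raw)
    # Drop characters in Unicode "C" categories (control, format, etc.),
    # but keep newlines and tabs.
    text = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    )
    # Remove the replacement character produced by failed decoding.
    text = text.replace("\ufffd", "")
    # Collapse runs of spaces and tabs into a single space.
    text = re.sub(r"[ \t]+", " ", text)
    return text.strip()

messy = "Revenue\u00a0rose\u200b 5%\x00 \ufffd in  Q2 "
print(clean_text(messy))  # Revenue rose 5% in Q2
```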

8.2 Free Stock Tools

Validate financial, ticker, and date inputs manually to avoid misread signals.
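
Part of that manual validation can be scripted. The sketch below checks a ticker against a simple pattern and a date against ISO format before either is sent to a tool; the pattern (one to five capital letters with an optional class suffix) is an assumption that fits many US-style symbols but not every exchange.

```python
import re
from datetime import date

# Assumed pattern: 1-5 capital letters, optional ".X" share-class suffix.
TICKER_RE = re.compile(r"[A-Z]{1,5}(\.[A-Z])?")

def validate_input(ticker: str, as_of: str, today: date) -> list:
    """Return a list of problems; an empty list means the input looks sane."""
    problems = []
    if not TICKER_RE.fullmatch(ticker):
        problems.append(f"unexpected ticker format: {ticker!r}")
    try:
        parsed = date.fromisoformat(as_of)
    except ValueError:
        problems.append(f"not a valid ISO date: {as_of!r}")
    else:
        if parsed > today:
            problems.append(f"date is in the future: {as_of}")
    return problems

today = date(2025, 3, 10)
print(validate_input("AAPL", "2025-03-03", today))    # []
print(validate_input("aapl ", "03/03/2025", today))   # two problems
```

A format check cannot confirm that a symbol actually exists or is the company you meant, so a quick lookup against the exchange listing is still worthwhile.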

8.3 Image/Text OCR

Standardize scanned documents before feeding into free OCR models for accuracy.

9. Long-Term Impact & Business Benefits

📈 Better insights lead to improved ROI

⚡ Faster deployment and trust in AI tools

💰 Cost savings by reducing manual checks

🌐 Scalability across teams and departments

🚀 Competitive advantage via sound decision-making

Major organizations report 20%+ gains from controlled data environments.

10. The Future: Why Data Quality Will Matter Even More

✔️ Growing reliance on generative AI and LLMs means data noise is amplified

✔️ Expanded governance demands from regulators

✔️ Evolving “data fabrics” in open-source frameworks

✔️ Shift toward synthetic data, which requires strict oversight to avoid “slop”

✅ Conclusion: Don’t Skip Data Prep

Free AI tools are powerful—but only when fed with accurate, clean, reliable data. By investing a little effort in quality—from automated pipelines to version control and observability—you unlock the difference between hype and high performance. Armed with good data, free AI becomes a trusted ally—delivering real, actionable insight.
